Trending topics

Bonk Eco continues to show strength amid the $USELESS rally

Pump.fun to raise $1B in token sale, traders speculate on airdrop

Boop.Fun leads the way with a new launchpad on Solana

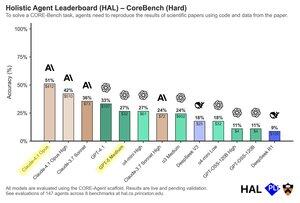

How does GPT-5 compare against Claude Opus 4.1 on agentic tasks?

Since their release, we have been evaluating these models on challenging science, web, service, and code tasks.

Headline result: although it is cost-effective, GPT-5 has so far not topped any agentic leaderboard. More evals 🧵

Many of these results surprised us, and we plan to investigate them more closely. Still, the trends across these benchmarks confirm that GPT-5 is not a step change and does not improve on OpenAI's other models. Where it does shine is the cost-accuracy tradeoff: it often comes in much cheaper than comparable models.
