Today marks a really big achievement for Nous, and potentially for the AI landscape as well.

We have begun a decentralized pretraining run of what is basically a dense DeepSeek: 40B parameters, over 20T tokens, with MLA (multi-head latent attention) for long-context efficiency.

All checkpoints (unannealed and annealed), the dataset, everything will be open-sourced live as the training goes on.

Check out the blog post in the quote tweet, written by the Psyche team leads @DillonRolnick, @theemozilla, and Ari, to learn *a lot* more about the infrastructure.

May 15, 2025

Announcing the launch of Psyche

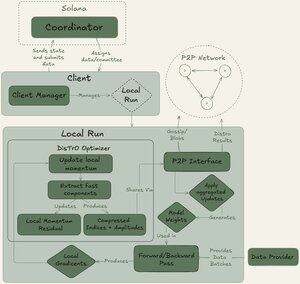

Nous Research is democratizing the development of Artificial Intelligence. Today, we’re embarking on our greatest effort to date to make that mission a reality: the Psyche Network.

Psyche is a decentralized training network that makes it possible to bring the world’s compute together to train powerful AI, giving individuals and small communities access to the resources required to create new, interesting, and unique large scale models.

We are launching our testnet today with the pre-training of a 40B parameter LLM, a model powerful enough to serve as a foundation for future pursuits in open science. This run represents the largest pre-training run conducted over the internet to date, surpassing previous efforts that trained smaller models on far fewer tokens.
