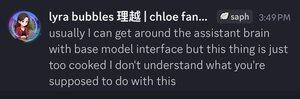

Starting to feel like this gpt-oss was trained on like 20T tokens of distilled, safe, maybe even benchmaxxed data from o3. There seems to be no base model underneath.

Is this phi 5 maxx?

Waiting for @karan4d's and @repligate's explorations of it lol

@karan4d @repligate If it is pure distillation pretraining, then there could be no "base model" to release, because there never was one

@karan4d @repligate The model is literally unable to function if not using its chat template? I've never seen that on a model that was pretrained on raw internet text

