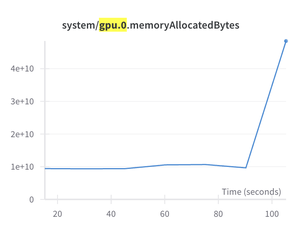

ok, need help! tried fine-tuning GPT-OSS over the weekend. it runs fine for ~100 steps, then throws a CUDA out-of-memory error.

my guess is that every so often, all the tokens get routed to a single expert, and that's when training crashes.

is there an easy fix? never fine-tuned an MoE before
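One way to test that guess before changing anything is to log how many tokens each expert receives per step. Below is a minimal plain-Python sketch, assuming you can pull a batch's router logits out as one list of per-expert scores per token. `expert_load`, `max_load_fraction`, and that input format are hypothetical, not part of any GPT-OSS or framework API.

```python
def expert_load(router_logits, top_k=1):
    """Count tokens routed to each expert under top-k routing.

    router_logits: one list of per-expert scores per token (assumed format,
    not a GPT-OSS API).
    """
    num_experts = len(router_logits[0])
    loads = [0] * num_experts
    for scores in router_logits:
        # The top_k highest-scoring experts for this token.
        chosen = sorted(range(num_experts), key=lambda e: scores[e], reverse=True)[:top_k]
        for e in chosen:
            loads[e] += 1
    return loads


def max_load_fraction(loads):
    """Share of all routed assignments that landed on the busiest expert."""
    total = sum(loads)
    return max(loads) / total if total else 0.0


# Every token prefers expert 2: a fully collapsed router.
loads = expert_load([[0.1, 0.2, 0.9, 0.0]] * 4)
print(loads)                     # [0, 0, 4, 0]
print(max_load_fraction(loads))  # 1.0
```

With balanced routing the busiest expert's share sits near `1 / num_experts`; under full collapse it reaches `1 / top_k` (1.0 for top-1). A jump toward that ceiling in the steps right before the OOM would support the hypothesis.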
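Two standard mitigations from the MoE literature (both popularized by the Switch Transformer) are a per-expert capacity cap, so one hot expert can only receive a bounded number of tokens per batch, and an auxiliary load-balancing loss that penalizes lopsided routing. Whether and how your training stack exposes either is framework-specific. The sketch below only illustrates the two ideas in plain Python with top-1 routing; all function names and the input format are hypothetical.

```python
import math


def route_with_capacity(router_logits, capacity_factor=1.25):
    """Top-1 routing with a per-expert capacity cap (Switch Transformer style).

    Returns (assignments, dropped): assignments[i] is the expert chosen for
    token i, or None if that expert was already full.
    """
    num_tokens = len(router_logits)
    num_experts = len(router_logits[0])
    # Each expert accepts at most this many tokens per batch.
    capacity = math.ceil(capacity_factor * num_tokens / num_experts)
    used = [0] * num_experts
    assignments = []
    for scores in router_logits:
        best = max(range(num_experts), key=lambda e: scores[e])
        if used[best] < capacity:
            used[best] += 1
            assignments.append(best)
        else:
            # Overflow token: skipped by the experts; in Switch-style layers it
            # is carried forward by the residual connection instead.
            assignments.append(None)
    return assignments, assignments.count(None)


def _softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [x / total for x in exps]


def load_balancing_loss(router_logits):
    """Switch-style auxiliary loss: num_experts * sum_i f_i * P_i.

    f_i = fraction of tokens whose top-1 expert is i,
    P_i = mean router probability assigned to expert i.
    It equals 1.0 when both f and P are uniform, and approaches num_experts
    when every token goes to one expert with probability near 1.
    """
    num_tokens = len(router_logits)
    num_experts = len(router_logits[0])
    probs = [_softmax(s) for s in router_logits]
    f = [0.0] * num_experts
    for p in probs:
        f[max(range(num_experts), key=lambda e: p[e])] += 1.0 / num_tokens
    P = [sum(p[e] for p in probs) / num_tokens for e in range(num_experts)]
    return num_experts * sum(fi * pi for fi, pi in zip(f, P))
```

The capacity cap bounds per-expert memory regardless of how the router behaves, at the cost of dropping some tokens; the auxiliary loss (added to the training loss with a small coefficient) pushes the router back toward even usage so fewer tokens get dropped in the first place.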

😒
