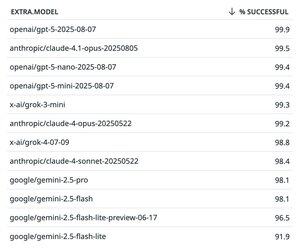

GPT-5 was advertised as reducing hallucinations, and it seems to deliver. Going from 99.5% to 99.9% accuracy takes the error rate from 0.5% to 0.1%, which is 80% fewer errors.

I don't know why people aren't making a bigger deal out of this. Hallucinations are one of the biggest problems of LLMs and some thought they were unsolvable.
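For anyone wanting to check the 80% figure, here is a minimal sketch of the arithmetic. It assumes the two numbers are accuracy percentages on the same benchmark (99.5% for the runner-up, 99.9% for GPT-5); the function name `relative_error_reduction` is just an illustrative helper, not anything from the chart's authors.

```python
def relative_error_reduction(acc_before: float, acc_after: float) -> float:
    """Return the fraction by which the error rate shrinks.

    Both arguments are accuracies in the range [0, 1).
    Assumption: both are measured on the same benchmark.
    """
    err_before = 1.0 - acc_before  # e.g. 0.005 for 99.5% accuracy
    err_after = 1.0 - acc_after    # e.g. 0.001 for 99.9% accuracy
    if err_before <= 0:
        raise ValueError("acc_before must be below 1.0")
    return (err_before - err_after) / err_before


reduction = relative_error_reduction(0.995, 0.999)
print(f"{reduction:.0%}")  # prints "80%"
```

The point is that a 0.4-point gain in accuracy looks small, but measured against the remaining errors (0.5% down to 0.1%) it removes four out of every five failures.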

Aug 15, 00:29

After one week, GPT-5 has topped our proprietary model charts for tool calling accuracy🥇

In second is Claude 4.1 Opus, at 99.5%

Details 👇

