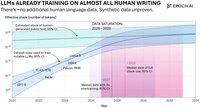

Why GPT-5 feels like a linear advance: in AI, it takes exponential gains in compute and training data to produce linear gains. The compute devoted to training has been rising unsustainably, doubling roughly three times as fast as Moore's Law. The supply of text data for pre-training is nearly exhausted.
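
To put the growth-rate claim in numbers, here is a rough sketch. It assumes Moore's Law doubles every 24 months (a commonly cited figure) and reads "3x Moore's Law pace" as a doubling time one third as long, about 8 months. Both are illustrative assumptions, not measured values from the post.

```python
# Hedged sketch: assumes Moore's Law doubles every 24 months and reads
# "3x Moore's Law pace" as a doubling time one third as long.
MOORE_DOUBLING_MONTHS = 24.0  # assumption: commonly cited doubling time
PACE_MULTIPLIER = 3.0         # "3x Moore's Law pace", from the post


def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth after `months` for a given doubling time."""
    return 2.0 ** (months / doubling_months)


# Doubling time implied for training compute (8 months under these assumptions).
training_doubling = MOORE_DOUBLING_MONTHS / PACE_MULTIPLIER

for years in (1, 5, 10):
    months = 12 * years
    moore = growth_factor(months, MOORE_DOUBLING_MONTHS)
    training = growth_factor(months, training_doubling)
    print(f"{years:>2} yr: Moore's Law x{moore:,.1f}, training compute x{training:,.1f}")
```

Under these assumptions, ten years of Moore's Law gives 32x, while training compute grows 32,768x, which is why that pace is hard to sustain.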

GPT-5 is a clear improvement. And it should surprise no one that the improvements feel linear, because they largely are. There will be new paradigms that deliver step-function gains and bend the curve (as reasoning did). But the current paradigm shows diminishing returns.

AI is awesome, to be clear. It's an incredible gift, and it's already transforming our world. But narratives of fast takeoff, exponential progress, and recursive self-improvement run into the diminishing returns (the exponential difficulty) of moving up those scaling laws.
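
The "exponential difficulty" of climbing a scaling law can be illustrated with a hypothetical power-law curve, L(C) = A * C^(-ALPHA). The constants A and ALPHA below are made-up illustrative values, not fitted to any real model. The sketch only shows the shape of the argument: equal, linear steps down in loss require ever-larger multiplicative jumps in compute.

```python
# Hypothetical power-law scaling curve L(C) = A * C**(-ALPHA).
# A and ALPHA are illustrative assumptions, not fitted values.
A = 10.0
ALPHA = 0.05


def loss(compute: float) -> float:
    """Loss predicted by the hypothetical power law for a compute budget."""
    return A * compute ** (-ALPHA)


def compute_for_loss(target: float) -> float:
    """Invert L(C) = A * C**(-ALPHA) to find the compute that reaches `target`."""
    return (A / target) ** (1.0 / ALPHA)


targets = [4.0, 3.5, 3.0, 2.5]  # equal (linear) steps down in loss
prev = None
for t in targets:
    c = compute_for_loss(t)
    note = "" if prev is None else f" ({c / prev:,.0f}x the previous step)"
    print(f"loss {t}: compute {c:.3e}{note}")
    prev = c
```

With these constants, reaching each successive target takes roughly 14x, 22x, and 38x the compute of the previous one: the gains stay linear while the cost per step keeps growing.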
