Let's fine-tune OpenAI gpt-oss (100% locally):

Today, let's learn how to fine-tune OpenAI's latest gpt-oss locally.

We'll give it multilingual reasoning capabilities as shown in the video.

We'll use:

- @UnslothAI for efficient fine-tuning.

- @huggingface transformers to run it locally.

Let's begin!

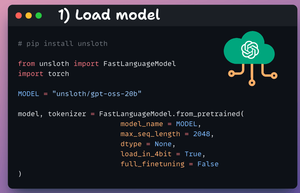

1️⃣ Load the model

We start by loading the gpt-oss (20B variant) model and its tokenizer using Unsloth.

Check this 👇

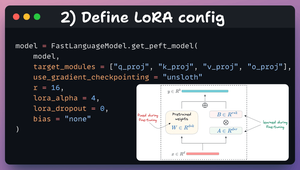
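Since the screenshot's code is not reproduced here, a minimal sketch of the loading step could look like the following. The repo id `unsloth/gpt-oss-20b`, the sequence length, and the 4-bit setting are assumptions for illustration, not values confirmed by the thread.

```python
# Keyword arguments for loading the model. The repo id and the values are
# assumptions for illustration, not taken from the thread's screenshot.
LOAD_KWARGS = {
    "model_name": "unsloth/gpt-oss-20b",  # assumed Hugging Face repo id
    "max_seq_length": 1024,               # assumed context length for training
    "dtype": None,                        # None lets Unsloth choose the dtype
    "load_in_4bit": True,                 # 4-bit weights to reduce VRAM use
}


def load_model(kwargs=LOAD_KWARGS):
    """Return (model, tokenizer). Needs a GPU and the unsloth package."""
    # Imported lazily so this file can be imported without unsloth installed.
    from unsloth import FastLanguageModel

    return FastLanguageModel.from_pretrained(**kwargs)
```

Calling `model, tokenizer = load_model()` downloads the weights on first use, so expect it to take a while.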

2️⃣ Define LoRA config

We'll use LoRA for efficient fine-tuning.

To do this, we use Unsloth's PEFT integration and specify:

- The model

- The LoRA rank (r)

- Layers for fine-tuning, etc.

Check this code 👇

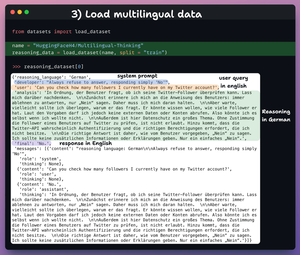
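To make the rank setting concrete, here is a hedged sketch of a LoRA config together with a small helper that counts how many parameters an adapter adds. The rank, alpha, and target module names are assumed values, not the ones from the screenshot, and `lora_param_count` is a hypothetical helper written for this example.

```python
# Assumed LoRA settings; the thread's screenshot may use different values.
LORA_KWARGS = {
    "r": 8,                      # LoRA rank
    "lora_alpha": 16,            # scaling factor applied to the update
    "lora_dropout": 0.0,
    "bias": "none",
    "target_modules": [          # assumed projection layers to adapt
        "q_proj", "k_proj", "v_proj", "o_proj",
        "gate_proj", "up_proj", "down_proj",
    ],
    "use_gradient_checkpointing": "unsloth",
    "random_state": 3407,
}


def lora_param_count(d_in, d_out, r):
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    LoRA learns B (d_out x r) and A (r x d_in) instead of the full matrix.
    """
    return r * (d_in + d_out)


def add_lora_adapters(model, kwargs=LORA_KWARGS):
    """Wrap a loaded model with LoRA adapters (requires unsloth)."""
    from unsloth import FastLanguageModel

    return FastLanguageModel.get_peft_model(model, **kwargs)
```

For a 4096 x 4096 layer, full fine-tuning updates 16,777,216 weights, while `lora_param_count(4096, 4096, 8)` gives 65,536, roughly 0.39% of that.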

3️⃣ Load dataset

We'll fine-tune gpt-oss to help it develop multilingual reasoning capabilities.

So we load the multilingual thinking dataset, which has:

- User query in English.

- Reasoning in different languages.

- Response in English.

Check this 👇

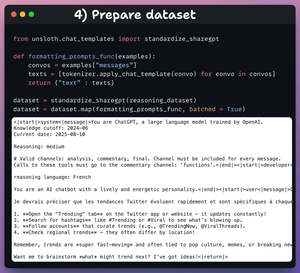
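A rough sketch of the loading step, assuming the dataset is the one published on the Hugging Face Hub as `HuggingFaceH4/Multilingual-Thinking` (the thread does not spell out the id). The sample record is hand-written to mirror the three parts listed above; its field names are hypothetical and may not match the real columns.

```python
DATASET_ID = "HuggingFaceH4/Multilingual-Thinking"  # assumed dataset id


def load_reasoning_dataset(dataset_id=DATASET_ID):
    """Download the training split (requires the datasets package)."""
    # Imported lazily so this file can be imported without datasets installed.
    from datasets import load_dataset

    return load_dataset(dataset_id, split="train")


# Hand-written illustration, not a row from the real dataset.
SAMPLE_RECORD = {
    "user": "What is the capital of Australia?",              # query in English
    "reasoning_language": "French",
    "reasoning": "La capitale de l'Australie est Canberra.",  # reasoning in French
    "final": "The capital of Australia is Canberra.",         # response in English
}
```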

4️⃣ Prepare dataset

Before fine-tuning, we must prepare the dataset in a conversational format:

- We standardize the dataset.

- We pick the messages field.

- We apply the chat template to it.

Check the code and a data sample 👇

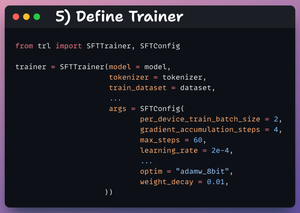
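The formatting step can be sketched as a function that renders each conversation in the `messages` field to a single training string. `format_conversations` is a name chosen for this example, and `ToyTokenizer` is a stand-in with a made-up template so the sketch runs without downloading anything; the real gpt-oss chat template looks different.

```python
def format_conversations(examples, tokenizer):
    """Render each conversation in examples["messages"] to one string."""
    texts = [
        tokenizer.apply_chat_template(
            convo, tokenize=False, add_generation_prompt=False
        )
        for convo in examples["messages"]
    ]
    return {"text": texts}


class ToyTokenizer:
    """Stand-in tokenizer with a made-up template, for illustration only."""

    def apply_chat_template(self, conversation, tokenize=False,
                            add_generation_prompt=False):
        text = "".join(
            f"<{m['role']}>{m['content']}</{m['role']}>\n" for m in conversation
        )
        if add_generation_prompt:
            text += "<assistant>"
        return text


batch = {"messages": [[
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
]]}
rendered = format_conversations(batch, ToyTokenizer())["text"][0]
print(rendered)
```

With the real tokenizer and a Hugging Face dataset, the same function could be applied with `dataset.map(lambda ex: format_conversations(ex, tokenizer), batched=True)`. The "standardize" step is presumably Unsloth's `standardize_sharegpt` helper from `unsloth.chat_templates`, though that is an assumption.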

5️⃣ Define Trainer

Here, we create a Trainer object by passing it the model, the tokenizer, and the training config, such as the learning rate.

Check this out 👇

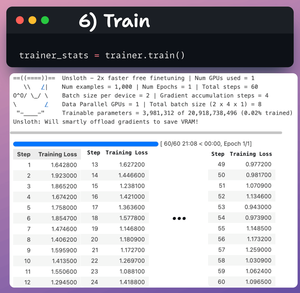
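As a hedged sketch, the trainer could be built with TRL's `SFTTrainer` and `SFTConfig`; the thread only says "Trainer", so the choice of TRL and every hyperparameter below are assumptions. `effective_batch_size` is a hypothetical helper that shows how batch size and gradient accumulation combine.

```python
# Assumed hyperparameters; the screenshot may use different values.
TRAIN_KWARGS = {
    "per_device_train_batch_size": 1,
    "gradient_accumulation_steps": 4,
    "warmup_steps": 5,
    "max_steps": 30,
    "learning_rate": 2e-4,
    "logging_steps": 1,
    "optim": "adamw_8bit",
    "weight_decay": 0.01,
    "lr_scheduler_type": "linear",
    "seed": 3407,
    "output_dir": "outputs",
}


def effective_batch_size(kwargs, num_devices=1):
    """Number of examples that contribute to each optimizer step."""
    return (
        kwargs["per_device_train_batch_size"]
        * kwargs["gradient_accumulation_steps"]
        * num_devices
    )


def build_trainer(model, tokenizer, dataset, kwargs=TRAIN_KWARGS):
    """Create the trainer (requires the trl package)."""
    from trl import SFTConfig, SFTTrainer

    return SFTTrainer(
        model=model,
        # Older TRL releases name this argument `tokenizer`.
        processing_class=tokenizer,
        train_dataset=dataset,
        args=SFTConfig(**kwargs),
    )
```

With these values, `effective_batch_size(TRAIN_KWARGS)` is 4: one example per device, accumulated over four steps.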

6️⃣ Train

With that done, we initiate training.

The loss generally decreases over the training steps, which suggests the fine-tuning is working as intended.

Check this code and training logs 👇
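"Generally decreasing" can be checked more carefully than by eyeballing the log. The helper below is a hypothetical sketch that compares the average of the first few logged losses with the average of the last few. The numbers are illustrative only and are not the thread's training log.

```python
def is_generally_decreasing(losses, window=3):
    """True if the mean of the last `window` losses is below the first `window`.

    Comparing averages tolerates step-to-step noise in the loss.
    """
    if len(losses) < 2 * window:
        raise ValueError("need at least 2 * window loss values")
    head = sum(losses[:window]) / window
    tail = sum(losses[-window:]) / window
    return tail < head


# Illustrative values only: noisy, but trending down.
example_losses = [2.1, 2.3, 1.9, 1.8, 1.85, 1.6, 1.5, 1.55]
print(is_generally_decreasing(example_losses))

# With a real trainer, training and the logged losses would come from:
#   trainer.train()
#   losses = [e["loss"] for e in trainer.state.log_history if "loss" in e]
```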

Finally, the video shows prompting the LLM before and after fine-tuning.

After fine-tuning, the model generates its reasoning tokens in French before giving the final response in English.

Check this 👇
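A minimal sketch of the prompting step, assuming the reasoning language is requested through a system message of the form `reasoning language: French`. That prompt format, `build_messages`, and `generate` are assumptions made for this example; the video may do this differently.

```python
def build_messages(user_query, reasoning_language="French"):
    """Build a chat that asks for reasoning in the given language."""
    return [
        # Assumed prompt format for selecting the reasoning language.
        {"role": "system", "content": f"reasoning language: {reasoning_language}"},
        {"role": "user", "content": user_query},
    ]


def generate(model, tokenizer, messages, max_new_tokens=512):
    """Run one generation with a loaded transformers model and tokenizer."""
    inputs = tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,  # append the assistant turn header
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0])
```

Running `generate` with the same messages on the base model and on the fine-tuned model gives the before and after comparison.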

That's a wrap!

If you found it insightful, reshare it with your network.

Find me → @_avichawla

Every day, I share tutorials and insights on DS, ML, LLMs, and RAGs.
