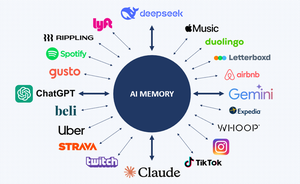

At @finalitycap, we think “Plaid for AI memory” is a massive opportunity. Imagine every app or AI model instantly knowing your context, preferences, interests, and history

This is huge for UX + costs 🧵👇

Props to @binji_x @nikunj @ashleymayer @levie for sparking great ideas

2/

Today, every AI model keeps your chats siloed. ChatGPT doesn’t know your Claude or Gemini history, or even your identity 🪪

Users waste time and money re-explaining themselves. It’s not just frustrating, it’s also costly 💸

3/

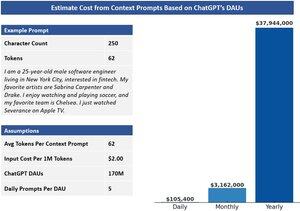

Users waste tokens and money re-entering the same context (personal info, preferences, etc.) in every LLM session

Since LLM providers charge per token, this inefficiency adds up

At ChatGPT’s scale, repeated context could cost nearly $40M/year in compute alone… here’s the math
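The thread's own inputs behind the ~$40M figure aren't given in the text, so they are not reproduced here. As a rough illustration of how such an estimate is usually structured, here is a minimal sketch assuming: yearly cost = daily active users × sessions per user per day × repeated context tokens per session × price per token × 365. It uses a per-token price as a stand-in for compute cost, which is itself an assumption, and every input in the example is a hypothetical placeholder, not a figure from the thread or from OpenAI.

```python
def repeated_context_cost_per_year(
    daily_active_users: float,
    sessions_per_user_per_day: float,
    repeated_tokens_per_session: float,
    usd_per_million_input_tokens: float,
    days_per_year: int = 365,
) -> float:
    """Estimate yearly spend on context tokens users re-send every session.

    All inputs are caller-supplied assumptions; nothing here is a real
    provider price or real usage data.
    """
    # Total re-sent context tokens across all users in one day.
    tokens_per_day = (
        daily_active_users * sessions_per_user_per_day * repeated_tokens_per_session
    )
    # Convert tokens to dollars using a per-million-token price (a proxy for compute).
    usd_per_day = tokens_per_day / 1_000_000 * usd_per_million_input_tokens
    return usd_per_day * days_per_year


if __name__ == "__main__":
    # Hypothetical inputs for illustration only (NOT the thread's numbers).
    estimate = repeated_context_cost_per_year(
        daily_active_users=10_000_000,
        sessions_per_user_per_day=2,
        repeated_tokens_per_session=200,
        usd_per_million_input_tokens=0.50,
    )
    print(f"${estimate:,.0f} per year")  # prints "$730,000 per year"
```

Because the cost is a plain product of its inputs, it scales linearly with each one: plugging in a larger user base, longer repeated context, or a higher per-token price moves the total proportionally, which is how a per-session inefficiency can reach tens of millions of dollars at very large scale.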

4/

AI companies are following the classic big tech playbook: locking user data in walled gardens to maximize profits

More user data = more revenue 🤑

That’s why tech giants built empires on advertising fueled by their users’ info. It’s time to give control back to users

5/

Memory will be a new moat that AI companies use to lock users in and raise switching costs 🌊

We’re already seeing this unfold with OpenAI’s new memory feature

Maybe one day we’ll log in via OAuth with “Sign in with OpenAI” 🔑, but true portability is still missing

6/

Lock-in will matter more as different AI models excel in different areas: general-purpose use, search, coding, therapy, etc.

Projects like @layerlens_ai, building AI eval benchmarks on @eigenlayer, will make these differences clear and highlight the true value of portability across models 🔄

7/

Teams building MCP (Model Context Protocol) servers let AI models securely access & update user context from many sources, but this still requires each source to expose an API

zkTLS via @OpacityNetwork could unify data from web2 apps, including those not accessible via public APIs, while keeping the underlying data private

8/

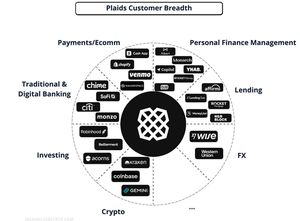

@Plaid transformed web2 fintech by unlocking financial data access and new apps

Plaid for AI Memory could do the same, powering a new wave of AI-driven experiences and applications
